In the aftermath of the U.S. banking crises of the 1930s, it became common for American economists to speak of the “inherent” instability of fractional-reserve banking and of the “perverse elasticity” of money supply in fractional-reserve banking systems.

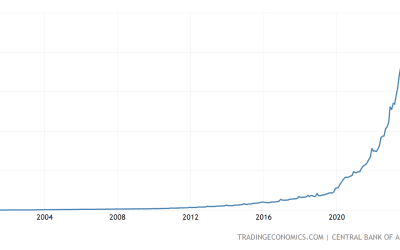

What the economists in question had in mind was the tendency, in existing fractional-reserve banking systems, for any increase in the public’s preferred ratio of currency holdings to holdings of bank demand deposits to result in a decline in bank lending, and hence in a decline in the overall money stock. That decline occurred despite the absence of any decline in the public’s overall desire for money balances of one kind or another. It was chiefly owing to this phenomenon that, during the first few years of the Great Depression, the U.S. (M2) money stock collapsed to just two-thirds of its pre-depression level.
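A minimal sketch of this mechanism uses the standard textbook money-multiplier identity. The notation is an assumption introduced here for illustration, not the author's: $C$ is currency held by the public, $D$ demand deposits, $R$ bank reserves, $B = C + R$ the stock of basic money, $M = C + D$ the money stock, $c = C/D$ the currency-deposit ratio, and $r = R/D$ the banks' reserve ratio, which the sketch holds fixed:

$$
\begin{aligned}
M &= (1 + c)\,D, \qquad B = (c + r)\,D, \qquad m \equiv \frac{M}{B} = \frac{1 + c}{c + r}, \\
\frac{\partial m}{\partial c} &= \frac{(c + r) - (1 + c)}{(c + r)^2} = -\frac{1 - r}{(c + r)^2} < 0 \quad \text{for } 0 < r < 1.
\end{aligned}
$$

With $B$ unchanged, a rise in $c$ therefore lowers $M = mB$, even though nothing in the identity requires the public to want smaller money balances overall.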

It was in response to this supposedly inherent drawback of fractional-reserve banking that several prominent economists, including Henry Simons, Irving Fisher, Lloyd Mints, and (eventually) Milton Friedman, began offering or endorsing proposals for “100 Percent Money,” meaning money consisting either of basic money itself or of bank deposits fully backed by basic money. Although these proposals closely resembled later proposals for 100-percent reserve banking put forward by Murray Rothbard and his Austrian-School followers, they differed in two respects. First, they treated fiat or “commodity-bundle” central bank money, rather than gold or silver, as the ideal form of basic money. Second, they did not base their arguments on any appeal to ethics: unlike their Austrian counterparts, the “Chicago” 100-percenters (for want of a more accurate designation) did not claim that fractional-reserve banks swindled their customers. Instead they condemned them solely for contributing to monetary instability.

But were the “Chicago” arguments for 100-percent money any sounder than their “Austrian” counterparts? Is it true that fractional-reserve banking is “inherently unstable” in the manner they claimed? As even their own writings show, it is not. In truth, what the Chicago 100-percenters treated as an “inherent” problem of fractional-reserve banking isn’t inherent to it at all. Instead, it stems from government regulations that restrict, or altogether prohibit, banks from issuing their own circulating banknotes on the same terms as those on which they are allowed to create demand deposits. In the U.S., banknote issue has almost always been subject to special restrictions. But these restrictions became increasingly severe after the outbreak of the Civil War, culminating in the complete suppression of commercial banknotes in 1935. Consequently, on the eve of the Great Depression it was impossible for most commercial banks to issue any banknotes at all, and very difficult for the rest to do so. Banks therefore had to count heavily on the Fed to meet any considerable increase in the public’s demand for circulating money by creating more units of basic money. Otherwise the banks might be stripped of cash reserves and forced either to severely contract their lending or to close their doors, if not both.

Had U.S. banks remained free to issue notes on the same terms, and subject to the same fractional-reserve requirements, as applied to their deposits, changes in the public’s demand for currency needn’t have had any such “perverse” consequences. On the contrary: so long as the terms are similar, a bank has no reason to care what share of its outstanding IOUs consists of notes and what share consists of deposits. The bank’s liquidity depends only on the sum of both sorts of IOUs, relative to its holdings of reserves of basic money. It also makes no difference whether “basic money” means gold, silver, or claims against a central bank. Indeed, a bank might well prefer to have clients hold its notes rather than keep deposits with it, since deposits sometimes bear interest, while notes do not. Confronted with a heavy demand for currency, a free bank happily pays out more of its paper IOUs, while debiting its customers’ deposit balances by the same amount. Because its reserves, reserve ratio, and liquidity all remain the same as before, it doesn’t have to stop lending, and therefore doesn’t contribute to any decline in the basic-money multiplier. In short, under free banking, in theory at least, an increased demand for currency doesn’t have any “perverse” consequences. Nor does it appear to have had any such consequences in fact in the relatively free banking systems of Scotland, Canada, and elsewhere. In these systems, although changes in the public’s preferred currency-deposit ratio happened frequently (the ratio always rose during the harvest season, for one thing), credit crunches and banking crises were extremely uncommon.
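The balance-sheet logic of the last paragraph can be sketched in a few lines of Python. The `Bank` class, the function names, and the figures in the usage lines are hypothetical illustrations, not drawn from any historical bank, and the sketch simplifies by treating a single bank that targets a fixed ratio of reserves to its total IOUs:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Bank:
    """Hypothetical single-bank balance sheet; liabilities split into notes and deposits."""
    reserves: float  # basic money held: gold, silver, or central-bank money
    notes: float     # the bank's own circulating banknotes
    deposits: float  # demand deposits

    def reserve_ratio(self) -> float:
        # Liquidity depends on reserves relative to the SUM of notes and deposits.
        return self.reserves / (self.notes + self.deposits)


def withdraw_free(bank: Bank, amount: float) -> Bank:
    """Free banking: a currency withdrawal is paid in the bank's own notes."""
    # Deposits fall and notes rise by the same amount; reserves are untouched.
    return replace(bank, notes=bank.notes + amount, deposits=bank.deposits - amount)


def withdraw_restricted(bank: Bank, amount: float) -> Bank:
    """No note issue: a currency withdrawal must be paid out of reserves."""
    if amount > bank.reserves:
        raise ValueError("withdrawal exceeds reserves: the bank must close its doors")
    return replace(bank, reserves=bank.reserves - amount, deposits=bank.deposits - amount)


def lending_cut_needed(bank: Bank, target_ratio: float) -> float:
    """IOUs the bank must retire (by contracting loans) to get back to target_ratio."""
    max_liabilities = bank.reserves / target_ratio
    return max(0.0, bank.notes + bank.deposits - max_liabilities)


# Two hypothetical banks, each starting with reserves equal to 10% of its IOUs,
# each facing a withdrawal of 5 units of currency.
free_bank = withdraw_free(Bank(reserves=10.0, notes=20.0, deposits=80.0), 5.0)
restricted_bank = withdraw_restricted(Bank(reserves=10.0, notes=0.0, deposits=100.0), 5.0)

for label, bank in (("free", free_bank), ("restricted", restricted_bank)):
    print(label, round(bank.reserve_ratio(), 4), round(lending_cut_needed(bank, 0.10), 2))
```

Run as written, this prints `free 0.1 0.0` and `restricted 0.0526 45.0`: the note-issuing bank keeps its 10% ratio and need not contract, while the bank barred from issuing notes sees its ratio fall to about 5.3% and must retire 45 units of IOUs, that is, cut its lending, to restore it.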

When, on the other hand, banks aren’t free to issue their own notes, or can do so only to a very limited extent, they have no choice but to satisfy customers’ demand for more currency by handing over scarce reserves, thereby reducing their liquidity. To restore that liquidity, they must then restrict their lending, which, other things equal, means reducing the banking-system money multiplier. If the central bank in turn fails to make compensating additions to the stock of basic money, the equilibrium money stock must decline. In the U.S. in the early 1930s, the public’s preferred overall currency-to-deposit ratio rose sharply, while the Fed for the most part stood by. Hence the Great (Monetary) Contraction.
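The size of the “compensating additions” a central bank would need can be sketched with the standard textbook identity $M = B\,(1 + c)/(c + r)$, where $c$ is the public's currency-deposit ratio and $r$ the banks' reserve-deposit ratio. The function names and ratios below are hypothetical and purely illustrative, not estimates for the 1930s, and the sketch holds $r$ fixed:

```python
def money_stock(base: float, c: float, r: float) -> float:
    """Textbook identity M = B * (1 + c) / (c + r).

    c: public's currency/deposit ratio; r: banks' reserve/deposit ratio.
    """
    return base * (1.0 + c) / (c + r)


def base_needed(target_money: float, c: float, r: float) -> float:
    """Basic money the central bank must supply to hold M at target_money."""
    return target_money * (c + r) / (1.0 + c)


# Hypothetical ratios, chosen only for illustration.
base, r = 100.0, 0.10
m_before = money_stock(base, c=0.10, r=r)  # before the public shifts toward currency
m_after = money_stock(base, c=0.30, r=r)   # after the shift, with the base unchanged
offset = base_needed(m_before, c=0.30, r=r) - base  # compensating addition to the base

print(f"M before: {m_before:.1f}, M after: {m_after:.1f}, base increase needed: {offset:.1f}")
```

With these made-up numbers, the money stock falls from 550 to 325 if the central bank stands by, whereas raising the base by roughly 69 units would hold it at 550.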

Some will object to my suggestion that freedom of note issue would have avoided the Great Contraction by noting that currency withdrawals at the time, instead of being routine withdrawals such as were then still typical of the harvest season, reflected distrust of the banks. In that case, a bank’s own notes might have been considered no safer than its deposits, so that central bank money would have been preferred to either. But while the notes of certain banks would undoubtedly have been distrusted, there’s no evidence that banknotes would have generally fallen out of favor: plenty of banks remained both trusted and solvent, and their notes could have supplied the needs of the country as a whole, since notes (unlike bank deposits) can travel wherever they are most wanted. The sole exception to this occurred in late February 1933, when a general run broke out. But this was, as Barry Wigmore has shown, actually a run for gold, based not on any general loss of confidence in the nation’s commercial banks but on (justified) fears that the incoming president would devalue the dollar.

Further evidence that freedom of note issue would have helped comes from the one step taken in that direction during the banking crisis. This consisted of a rider to the Federal Home Loan Bank Act known as the “Glass-Borah Law.” That law briefly and modestly relaxed the regulatory limits on national banks’ ability to issue national banknotes by expanding the range of collateral that could back those notes. The Glass-Borah Law resulted in a substantial and much-needed increase in the supply of currency, although it still left the banks too restricted to avoid massive reserve losses. Just how far a more generous measure, including steps to allow state as well as national banks to issue their own currency, might have gone in limiting or even preventing the crisis is a question crying out for further research.

Why, then, did the “Chicago” 100-percenters insist on treating the “perverse” behavior of the U.S. money stock in the 1930s as a problem inherent to fractional-reserve banking rather than as a consequence of government regulation? As their own writings make clear, at least some of them actually admitted that freedom of note issue was a theoretical solution to the problem. In his *A Program for Monetary Stability* (1960, p. 69), for instance, Milton Friedman observed:

> To keep changes in the form in which the public holds its cash [that is, money] balances from affecting the amount there is to be held, the conditions of issue must be the same for currency and deposits. . . . The first solution would involve permitting banks to issue currency as well as deposits subject to the same fractional reserve requirements and to restrict what is presently high-powered money to use as bank reserves.
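Friedman's condition can be checked with a similarly minimal identity; the notation is an illustrative assumption, not his. Suppose banks issue notes $N$ and deposits $D$ against a common reserve ratio $r$, and all basic money $B$ is confined to use as bank reserves $R$, as in his “first solution”:

$$
B = R = r\,(N + D) \quad\Longrightarrow\quad M = N + D = \frac{B}{r}.
$$

The money stock then depends only on $B$ and $r$, so a shift in the public's preferences between notes and deposits leaves it unchanged.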

Yet Friedman went on to reject this solution in favor of the 100-percent reserve alternative. He did so because he believed at the time that freedom of note issue itself raised insuperable problems. This stance, which was presumably shared by other “Chicago” 100-percenters, was itself due to a misinterpretation of the American “free banking” experience. Friedman himself eventually came to admit his mistake in light of research by modern free bankers (see Friedman and Schwartz 1986), though his reversal was somewhat half-hearted (see my “Milton Friedman and the Case Against Currency Monopoly”). Whether Simons or Mints or Fisher or any of the other “Chicago” 100-percenters would ever have done likewise is of course something we shall never know.